Word embeddings such as word2vec and GloVe vectors have been shown to capture syntactic and semantic information about words, and they have become a standard component in many state-of-the-art NLP architectures. However, their context-free nature limits their ability to represent context-dependent information.

Neither Word2Vec nor GloVe solves problems such as:

- By default, a Word2Vec or GloVe model has one representation per word. A single vector can try to accumulate all contexts, but that ends up generalizing across them to at least some extent, so the precision of each context is compromised. This is especially a problem for words that appear in very different contexts, and it can lead to one context overpowering the others.

- How to learn representations for out-of-vocabulary words.

- How to learn long-term context dependencies (not just co-occurrence, but context).

For example, there will be only one Word2Vec representation for ‘apple’ the company and ‘apple’ the fruit.
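To make the limitation concrete, here is a toy sketch of a context-free lookup. The vectors and the `static_embed` helper are invented for illustration and do not come from any trained model; the point is only that the same vector comes back for 'apple' whatever the surrounding words are.

```python
# Toy context-free embedding table; the numbers are invented for illustration.
STATIC_VECTORS = {
    "apple": [0.21, -0.47, 0.88],
    "pie": [0.10, 0.35, -0.22],
    "stock": [-0.63, 0.05, 0.41],
}
UNK_VECTOR = [0.0, 0.0, 0.0]  # shared fallback for out-of-vocabulary words


def static_embed(tokens):
    """Look each token up independently; the neighbouring words are ignored."""
    return [STATIC_VECTORS.get(tok.lower(), UNK_VECTOR) for tok in tokens]


fruit = static_embed("I baked an apple pie".split())
company = static_embed("Apple stock rose today".split())

# 'apple' sits at index 3 in the first sentence and index 0 in the second.
print(fruit[3] == company[0])  # both senses share one vector
```

Every unknown word also collapses onto the same fallback vector, which is the out-of-vocabulary problem listed above.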

More recently, researchers have successfully learned contextualized vector representations of words (embeddings) by training deep language models. The technique demonstrated in this blog post tackles all of the above problems with traditional word vector representations.

Embeddings from Language Models (ELMo)

In March 2018, ELMo came out as one of the great breakthroughs in the NLP space. The paper went on to win an outstanding paper award at NAACL.

The flow of the ELMo deep neural network architecture is shown below.

The ELMo language model is a fairly complex architecture. The exact configuration of the (medium-size) ELMo architecture can be seen in this json file. First, we convert each token to an appropriate representation using character embeddings. This lets us pick up on morphological features that word-level embeddings could miss, and it also eliminates the issue of out-of-vocabulary words. The character embedding representation is then run through a convolution layer with some number of filters, followed by a max-pool layer. The convolution filters pick up on character n-gram features that build more powerful representations. Finally, this representation is passed through a 2-layer highway network before being provided as input to the Bi-LSTM language model layers.
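The character-level front end described above can be sketched in plain Python. This is a simplified illustration under stated assumptions, not the actual ELMo code: the character id scheme, the tiny dimensions and the random (untrained) weights are all made up, and the highway layers are left out. It only shows why any string, seen in training or not, ends up with a fixed-size vector.

```python
import random


def char_ids(token, max_chars=10, pad_id=0):
    """Map a token to fixed-length character ids (toy scheme, not ELMo's own)."""
    ids = [(ord(ch) % 255) + 1 for ch in token[:max_chars]]
    return ids + [pad_id] * (max_chars - len(ids))


def make_table(rows, cols, rng):
    """Random weight table; stands in for learned parameters."""
    return [[rng.uniform(-1.0, 1.0) for _ in range(cols)] for _ in range(rows)]


def conv_max_pool(char_vectors, conv_filter):
    """Slide one filter over character n-grams and keep the strongest response."""
    width = len(conv_filter)
    responses = []
    for start in range(len(char_vectors) - width + 1):
        window = char_vectors[start:start + width]
        responses.append(sum(
            w * f
            for vec, filt_row in zip(window, conv_filter)
            for w, f in zip(vec, filt_row)
        ))
    return max(responses)


def encode_token(token, char_table, filters):
    """Character embeddings -> one max-pooled feature per convolution filter."""
    vectors = [char_table[i] for i in char_ids(token)]
    return [conv_max_pool(vectors, filt) for filt in filters]


rng = random.Random(0)
CHAR_DIM = 4
char_table = make_table(256, CHAR_DIM, rng)  # one row per character id 0..255
filters = [make_table(width, CHAR_DIM, rng) for width in (1, 2, 3)]

# Any string gets a vector, including one never seen in training.
print(len(encode_token("switchboard", char_table, filters)))
print(len(encode_token("zxqvy", char_table, filters)))
```

Each filter of width n responds to character n-grams, and max-pooling keeps the best match wherever it occurs in the word.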

ELMo is a combination of the intermediate layer representations in the biLM. More generally, we compute a task-specific weighting of all biLM layers, as shown in the above figure. For inclusion in a downstream model, ELMo collapses all layers, i.e. the input layer (x), the first Bi-LSTM output layer (h1) and the second output layer (h2), into a single vector. The following equation calculates the embedding of a word from the trained language model.

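$$\mathrm{ELMo}_k^{task} = \gamma^{task} \sum_{j=0}^{L} s_j^{task} \, h_{k,j}^{LM}$$

Here $h_{k,0}^{LM}$ is the input layer x for token k, $h_{k,1}^{LM}$ and $h_{k,2}^{LM}$ are the Bi-LSTM outputs h1 and h2 (so L = 2), $s_j^{task}$ are softmax-normalized layer weights, and $\gamma^{task}$ is a scalar that scales the whole vector.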
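The layer collapse described above can be sketched in a few lines of plain Python, assuming the formulation from the ELMo paper: softmax-normalized scalar weights over the layers and a single scalar scale. The function names and the toy layer values are invented for illustration.

```python
import math


def softmax(scores):
    """Normalize raw layer scores into weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def collapse_layers(layers, raw_scores, gamma=1.0):
    """Weighted sum of per-layer vectors for one token, scaled by gamma."""
    weights = softmax(raw_scores)
    dim = len(layers[0])
    return [
        gamma * sum(w * layer[d] for w, layer in zip(weights, layers))
        for d in range(dim)
    ]


# Toy 4-dimensional layer outputs for a single token (invented numbers).
x = [1.0, 0.0, 2.0, -1.0]   # input (character CNN) layer
h1 = [0.5, 1.5, 0.0, 1.0]   # first Bi-LSTM layer
h2 = [0.0, 3.0, 1.0, 2.0]   # second Bi-LSTM layer

# Equal raw scores give each layer a weight of 1/3, i.e. a plain average.
elmo_vector = collapse_layers([x, h1, h2], raw_scores=[0.0, 0.0, 0.0])
print(elmo_vector)
```

In a downstream task the raw scores and gamma would be learned together with the task model, so each task can decide how much to rely on each layer.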

Now, let's look at how we can train ELMo on custom data, which may come from the finance, insurance or health care domain, in order to learn domain-specific language and contextual terminology.

Implementation

We will clone the TensorFlow implementation of ELMo from here. This framework (GitHub repository) allows us to train an ELMo model from scratch and later dump the trained model weights in a format that can be used for inference.

It is better to set up a conda environment with all the necessary packages installed, including tensorflow (1.2) and h5py. A GPU is strongly recommended for faster training, especially if you have a huge amount of text. Follow these steps and test your installation for sanity.

Data-set

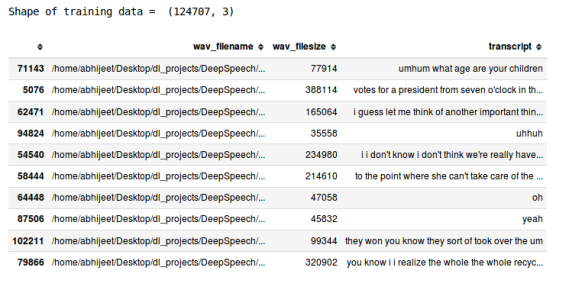

For demonstration purposes, we train a custom ELMo model from scratch on Switchboard data, which is a transcription of 240 hours of conversational speech. For convenience, I have created train, test and validation csv files of the Switchboard data-set here. The training data contains 1.24K sentences (or transcript fragments). Let's get started with training!

Import

import os
import pandas as pd
from collections import Counter

To train and evaluate a biLM, you need to provide:

- a set of training files

- a set of heldout files

- a vocabulary file

Prepare Training Data

The training data should be randomly split into many training files, each containing one slice of the data. Each file contains pre-tokenized and white space separated text, one sentence per line. 3rd column of train.csv (data) is the transcription of respective speech fragments.

data_train = pd.read_csv("swb/swb-train.csv")

print("Shape of training data = ", data_train.shape)

data_train.sample(10)

We add a white-space-separated full stop to each sentence in the data. There are 1.24K sentences in train.csv here.

data_train['transcript'] = data_train['transcript'] + " ."
data_train['transcript'].head()

As training requires multiple files with one sentence per line, we will create 20K training files by writing 6 sentences per file. After running the Python snippet below, we get 20K files in the train directory.

# Create the output directory if it does not already exist.
os.makedirs("swb/train", exist_ok=True)

# Write 6 sentences per file, one sentence per line.
for i in range(0, data_train.shape[0], 6):
    text = "\n".join(data_train['transcript'][i:i+6].tolist())
    with open("swb/train/" + str(i) + ".txt", "w") as fp:
        fp.write(text)

Prepare Validation Data

Validation data is prepared in the same manner as the training data.

data_dev = pd.read_csv("swb/swb-dev.csv")
data_dev['transcript'] = data_dev['transcript'] + " ."

# Create the output directory if it does not already exist.
os.makedirs("swb/dev", exist_ok=True)

# Write 6 sentences per file, one sentence per line.
for i in range(0, data_dev.shape[0], 6):
    text = "\n".join(data_dev['transcript'][i:i+6].tolist())
    with open("swb/dev/" + str(i) + ".txt", "w") as fp:
        fp.write(text)
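Since the training and validation files are written the same way, the two loops could be factored into one helper. The sketch below is one possible shape for it; `write_shards` is a hypothetical name, and the demo runs on made-up sentences in a temporary directory rather than on the Switchboard csv files.

```python
import os
import tempfile


def write_shards(sentences, out_dir, per_file=6):
    """Write sentences to out_dir, per_file sentences per text file, one per line."""
    os.makedirs(out_dir, exist_ok=True)
    paths = []
    for start in range(0, len(sentences), per_file):
        path = os.path.join(out_dir, "{}.txt".format(start))
        with open(path, "w", encoding="utf-8") as fp:
            fp.write("\n".join(sentences[start:start + per_file]))
        paths.append(path)
    return paths


# Demo on a throwaway directory with 14 made-up sentences.
demo_sentences = ["sentence number {} .".format(i) for i in range(14)]
with tempfile.TemporaryDirectory() as tmp:
    shard_paths = write_shards(demo_sentences, os.path.join(tmp, "train"))
    shard_names = sorted(os.path.basename(p) for p in shard_paths)
    with open(shard_paths[-1], encoding="utf-8") as fp:
        last_shard_lines = fp.read().split("\n")

print(shard_names)
```

With the data frames from this post, the calls would look like `write_shards(data_train['transcript'].tolist(), "swb/train")` and `write_shards(data_dev['transcript'].tolist(), "swb/dev")`.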

Preparing Vocabulary File

The vocabulary file is a text file with one token per line. It must also include the special tokens <S>, </S> and <UNK>.

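# Join all transcripts and split on whitespace to get the token stream.
# split() without arguments avoids empty tokens when a transcript has repeated spaces.
texts = " ".join(data_train['transcript'].tolist())
words = texts.split()
print("Number of tokens in Training data = ", len(words))

dictionary = Counter(words)
print("Size of Vocab", len(dictionary))

# Special tokens come first, followed by tokens in descending order of frequency.
sorted_vocab = ["<S>", "</S>", "<UNK>"]
sorted_vocab.extend([pair[0] for pair in dictionary.most_common()])

text = "\n".join(sorted_vocab)
with open("swb/vocab.txt", "w") as fp:
    fp.write(text)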
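A small self-contained variant of the vocabulary step, with a sanity check, may help catch a malformed file before training starts. `build_vocab` and `check_vocab` are hypothetical helpers written for this sketch, and the two demo sentences are invented; the second one contains a double space on purpose.

```python
from collections import Counter

SPECIAL_TOKENS = ["<S>", "</S>", "<UNK>"]


def build_vocab(sentences):
    """Return special tokens followed by corpus tokens, most frequent first."""
    counts = Counter(tok for line in sentences for tok in line.split())
    return SPECIAL_TOKENS + [tok for tok, _ in counts.most_common()]


def check_vocab(vocab):
    """Raise if special tokens are not on top, a token repeats, or a token is empty."""
    if vocab[:3] != SPECIAL_TOKENS:
        raise ValueError("first three entries must be <S>, </S>, <UNK>")
    if len(set(vocab)) != len(vocab):
        raise ValueError("duplicate tokens in vocabulary")
    if "" in vocab:
        raise ValueError("empty token in vocabulary")


demo = ["yeah i think so .", "i think  so too ."]
vocab = build_vocab(demo)
check_vocab(vocab)
print(vocab)
```

Splitting with `line.split()` rather than `line.split(" ")` matters here: the latter would turn the double space into an empty-string token, which would then be written to the vocabulary file as a blank line.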

Train the biLM model

We are ready to train our custom biLM model now.

python bin/train_elmo.py --train_prefix='swb/train/*' --vocab_file 'swb/vocab.txt' --save_dir 'swb/checkpoint'

There are two things to remember:

- Make sure to create the directory ‘swb/checkpoint’ and keep the options.json file in it.

- Modify the configuration in train_elmo.py as per options.json, especially n_train_tokens and n_vocab_tokens.
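The token count for the configuration can be obtained by counting whitespace-separated tokens across the training files. The helper below is a minimal sketch; `count_tokens` is a hypothetical name, the demo files are made up, and whether the training script expects sentence-boundary tokens to be included in the count is not addressed here.

```python
import glob
import os
import tempfile


def count_tokens(file_pattern):
    """Count whitespace-separated tokens across all files matching the pattern."""
    total = 0
    for path in glob.glob(file_pattern):
        with open(path, encoding="utf-8") as fp:
            for line in fp:
                total += len(line.split())
    return total


# Demo on two throwaway files; on the real data the pattern would be 'swb/train/*'.
with tempfile.TemporaryDirectory() as tmp:
    demo_files = [("0.txt", "yeah i think so .\nright ."), ("6.txt", "uh-huh .")]
    for name, text in demo_files:
        with open(os.path.join(tmp, name), "w", encoding="utf-8") as fp:
            fp.write(text)
    n_train_tokens = count_tokens(os.path.join(tmp, "*"))

print(n_train_tokens)
```

The vocabulary size, for comparison, is simply the number of lines in vocab.txt.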

Evaluate the biLM model

We will evaluate the trained model in the checkpoint directory on the validation set. Language models are evaluated by their perplexity score. To learn more about language model perplexity, see the literature on the topic. As with the training set, we get the batch perplexity for each validation batch and the average perplexity over the validation set.

python bin/run_test.py --test_prefix='swb/dev/*' --vocab_file 'swb/vocab.txt' --save_dir='swb/checkpoint'
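For readers new to the metric, perplexity is the exponential of the average negative log-probability the model assigns to the correct next token. The sketch below computes it for two invented probability sequences; it is an illustration of the definition and not the evaluation code of the repository.

```python
import math


def perplexity(token_probs):
    """exp of the average negative log-probability assigned to the true tokens."""
    if not token_probs:
        raise ValueError("need at least one token probability")
    avg_nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(avg_nll)


# Probabilities a hypothetical model gave to the correct next word at each step.
confident = [0.5, 0.5, 0.5, 0.5]
uncertain = [0.1, 0.1, 0.1, 0.1]

print(perplexity(confident))
print(perplexity(uncertain))
```

A lower value is better: a model that always gives the right word probability 0.5 is about as uncertain as a fair choice between 2 words, while probability 0.1 corresponds to a choice among roughly 10.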

Convert Tf Checkpoint to hdf5

This step converts the checkpoint model to hdf5 format, which can easily be used for inference.

python bin/dump_weights.py --save_dir 'swb/checkpoint' --outfile 'swb/swb_weights.hdf5'

Prediction

Now,

- Keep the dumped weights file in a newly created model folder.

- Create an options.json file for the newly trained model in the same folder.

- It is important to always set n_characters to 262 after training.

- Keep vocab.txt in the model directory.
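The n_characters change can be scripted instead of edited by hand. The sketch below assumes that the setting lives under the `char_cnn` key of options.json; `set_n_characters` is a hypothetical helper, and the demo uses a minimal stand-in file in a temporary directory rather than a real options file.

```python
import json
import os
import tempfile


def set_n_characters(options_path, n_characters=262):
    """Rewrite options.json in place with char_cnn.n_characters updated."""
    with open(options_path, encoding="utf-8") as fp:
        options = json.load(fp)
    # Assumption: the character count is stored under the "char_cnn" section.
    options["char_cnn"]["n_characters"] = n_characters
    with open(options_path, "w", encoding="utf-8") as fp:
        json.dump(options, fp, indent=2)
    return options


# Demo on a stand-in options file holding a training-time value.
with tempfile.TemporaryDirectory() as tmp:
    demo_path = os.path.join(tmp, "options.json")
    with open(demo_path, "w", encoding="utf-8") as fp:
        json.dump({"char_cnn": {"n_characters": 261}}, fp)
    updated = set_n_characters(demo_path)
    with open(demo_path, encoding="utf-8") as fp:
        reloaded = json.load(fp)

print(updated["char_cnn"]["n_characters"])
```

For the layout used in this post, the call would be `set_n_characters("swb/model/options.json")`.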

import os

# Silence TensorFlow's C++ logging; this must be set before importing tensorflow.
os.environ['TF_CPP_MIN_LOG_LEVEL'] = '3'

import tensorflow as tf
import numpy as np
import scipy.spatial.distance as ds
from bilm import Batcher, BidirectionalLanguageModel, weight_layers

# Location of the trained LM: vocabulary, options and dumped weights.
datadir = os.path.join('swb', 'model')
vocab_file = os.path.join(datadir, 'vocab.txt')
options_file = os.path.join(datadir, 'options.json')
weight_file = os.path.join(datadir, 'swb_weights.hdf5')

# Create a Batcher to map text to character ids.
batcher = Batcher(vocab_file, 50)

# Input placeholders to the biLM.
context_character_ids = tf.placeholder('int32', shape=(None, None, 50))

# Build the biLM graph.
bilm = BidirectionalLanguageModel(options_file, weight_file)

# Get ops to compute the LM embeddings.
context_embeddings_op = bilm(context_character_ids)

# Get an op to compute ELMo (weighted average of the internal biLM layers)
elmo_context_input = weight_layers('input', context_embeddings_op, l2_coef=0.0)

# Now we can compute embeddings.
raw_context = ['Technology has advanced so much in new scientific world',
               'My child participated in fancy dress competition',
               'Fashion industry has seen tremendous growth in new designs']
tokenized_context = [sentence.split() for sentence in raw_context]
print(tokenized_context)

with tf.Session() as sess:
    # It is necessary to initialize variables once before running inference.
    sess.run(tf.global_variables_initializer())

    # Create batches of data.
    context_ids = batcher.batch_sentences(tokenized_context)
    print("Shape of context ids = ", context_ids.shape)

    # Compute ELMo representations (here for the input only, for simplicity).
    elmo_context_input_ = sess.run(
        elmo_context_input['weighted_op'],
        feed_dict={context_character_ids: context_ids}
    )

print("Shape of generated embeddings = ", elmo_context_input_.shape)

There are 3 sentences with a maximum sentence length of 9 words, and the embedding length for each word is 256 (128 dimensions each for the forward and backward directions, concatenated). Hence, the shape of the generated embeddings is (3, 9, 256).

# Pick the token embeddings to compare: 'dress' is token 5 of sentence 2,
# 'Technology' and 'Fashion' are token 0 of sentences 1 and 3.
dress = elmo_context_input_[1, 5, :]
technology = elmo_context_input_[0, 0, :]
fashion = elmo_context_input_[2, 0, :]

# Computing euclidean distance between word embeddings
euc_dist_dress_tech = np.linalg.norm(dress - technology)
euc_dist_dress_fashion = np.linalg.norm(dress - fashion)

# Computing cosine distance between word embeddings
cos_dist_dress_tech = ds.cosine(dress, technology)
cos_dist_dress_fashion = ds.cosine(dress, fashion)

print("Euclidean Distance Comparison - ")
print("\nDress-Technology = ", np.round(euc_dist_dress_tech, 2),
      "\nDress-Fashion = ", np.round(euc_dist_dress_fashion, 2))

print("\n\nCosine Distance Comparison - ")
print("\nDress-Technology = ", np.round(cos_dist_dress_tech, 2),
      "\nDress-Fashion = ", np.round(cos_dist_dress_fashion, 2))
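To see what the two measures compute without running the model, here is a dependency-free sketch on invented 3-dimensional vectors standing in for the 256-dimensional ELMo embeddings. The `cosine_distance` helper follows the same convention as `scipy.spatial.distance.cosine`, namely 1 minus the cosine similarity, so smaller values mean more similar vectors.

```python
import math


def euclidean_distance(a, b):
    """Straight-line distance between two vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def cosine_distance(a, b):
    """1 - cosine similarity, the convention used by scipy.spatial.distance.cosine."""
    return 1.0 - cosine_similarity(a, b)


# Invented vectors; they are not outputs of the trained model.
dress = [1.0, 2.0, 0.0]
fashion = [2.0, 3.0, 1.0]
technology = [-1.0, 0.5, 3.0]

print(cosine_distance(dress, fashion) < cosine_distance(dress, technology))
print(euclidean_distance(dress, fashion) < euclidean_distance(dress, technology))
```

Euclidean distance is sensitive to vector length while cosine distance only looks at direction, which is why the post reports both.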

At the End

Hope the tutorial was easy to follow. Beginners interested in training a contextual language model for embedding generation can start with this application. Readers are strongly encouraged to download the data-set and check whether they can reproduce the results. Feel free to ask in the comments if anything needs a more explicit explanation.

A few points worth noting:

- As ELMo is based on character encodings, it also takes care of out-of-vocabulary words.

- Each word gets a different embedding in different context sentences, which can help in learning context for various text classification tasks.

- As of March 2018, the performance of ELMo across a diverse set of six benchmark NLP tasks shows that simply adding ELMo establishes a new state-of-the-art result, with relative error reductions ranging from 6 to 20% over strong base models (as claimed in the paper).

- For domain-specific NLP tasks in finance, insurance, health care, etc., it would be better to train an ELMo model on domain-specific text (nearly 1 billion tokens) and use that language model instead of a model pre-trained on generic English.

If you liked the post, follow this blog to get updates about upcoming articles. Also, share it so that it can reach out to the readers who can actually gain from this. Please feel free to discuss anything regarding the post. I would love to hear feedback from you.

Happy deep learning 🙂

Good tutorial for beginners. The ELMo model in this example is trained at the character level. How can an ELMo model be trained at the word level?


With options you provided in the code. I have 2 questions!

** Please help, Why I am getting this output?

FINSIHED! AVERAGE PERPLEXITY = 50.383595

** Is the following output correct?

Euclidean Distance Comparison –

Dress-Technology = 27.07

Dress-Fashion = 22.39

Cosine Distance Comparison –

Dress-Technology = 0.81

Dress-Fashion = 0.63


1. Most language models are evaluated by perplexity, which is the exponential of the average cross-entropy loss. In general terms, perplexity measures how well the model can predict a given word 't' given a sequence of previous words, say 't-1', 't-2', ..., 't-n'.

2. Yes, the output is correct, as the cosine distance between Dress and Fashion is smaller than the one between Dress and Technology. Cosine similarity is (1 - cosine distance).

(https://docs.scipy.org/doc/scipy/reference/generated/scipy.spatial.distance.cosine.html#scipy.spatial.distance.cosine).


I am facing a runtime error with the tests and all the tests fail. Any solution to this?
