This blog-post is the subsequent part of my previous blog-post on developing question answering system on Facebook bAbI data-set. In my previous article, the bAbI data-set was described and we have extracted features for building the model. If you have directly landed on this blog-post then I would suggest you to read the previous blog-post.

In this article, we will focus on writing python implementation of end to end memory neural network model using Keras. So, let’s start with understanding and building the model proposed by Facebook AI research in 2015 in this paper “End to End Memory Networks“.

1. Understanding Memory Network Model

To understand the end to end memory network model, let us define few memory representation vectors and its computation first.

Input memory representation

The story vectors derived from vocabulary (previous article) are converted into memory vectors of dimension ‘d’ (here, assume embedding_size ‘d’=50) computed by embedding layer. An embedding layer takes story vector (max length 68) as input and generates a fixed ‘d’ dimensional vector for each input story vector. Let us call this embedding ‘m’. Dimension of ‘m’ would be (max_story_length, embedding_size) i.e. (68,50).

Question memory representation

The query vector is also embedded (again, via another embedding layer in keras with the same dimensions as ‘d’ (here d=50) to obtain an embedding ‘q’. The embedding layer takes query vector (max length 4) as input and generates an embedding ‘q’ of ‘d’ dimension. Dimension of ‘q’ would be (query_length, embedding_size) i.e. (4,50).

Further, In the embedding space, we compute the probability vector ‘p’ i.e. match between ‘q’ and each memory ‘m’ by taking the inner product followed by a softmax function:

p = Softmax(dot(m,q))

Dimension of ‘p’ would be (max_story_length, query_length) i.e. (68,4)

Output memory representation

The story vector are also converted into memory vector of dimension query max length i.e. 4 computed by a separate embedding layer. This embedding layer takes story vector (max length 68) vector as input and generates an embedding of ‘max length=4’ dimension. Let us call this embedding ‘c’. Dimension of ‘c’ would be (max_story_length, query_length) i.e. (68,4).

The output vector or response vector ‘o’ is then calculated by sum over the transformed embedding ‘c’ , weighted by the probability vector ‘p’ calculated earlier:

o = add(c,p)

Dimension of ‘o’ would be (max_story_length, query_length) i.e. (68,4)

Final prediction

The output or response vector ‘o’ and the input vector ‘m’ are concatenated to form a result vector ‘r’ which is further passed to LSTM layer and finally to output Dense layer of vocabulary size which gives softmax probability of each word in the vocab. The word predicted with greater accuracy is considered as answer word. Dimension after concatenation would be (query_length, embedding_size + max_story_length) i.e. (4, 118).

answer = Softmax(Dense(LSTM(concat(o,q))))

or it can also be written as

answer = Softmax(W(concat(o,q)))

A pictorial diagram is shown below which showcase the above procedure of building the model.

2. Building the Model

Hope the above section gave pretty clear illustration on how answer word is predicted through the memory network model. Let us quickly build an end to end single layer memory network model.

Importing Libraries

import IPython import matplotlib.pyplot as plt import pandas as pd import keras from keras.models import Sequential, Model from keras.layers.embeddings import Embedding from keras.layers import Permute, dot, add, concatenate from keras.layers import LSTM, Dense, Dropout, Input, Activation

Config file (Parameters)

# number of epochs to run train_epochs = 100 # Training batch size batch_size = 32 # Hidden embedding size embed_size = 50 # number of nodes in LSTM layer lstm_size = 64 # dropout rate dropout_rate = 0.30

Defining the Model

# placeholders

input_sequence = Input((story_maxlen,))

question = Input((query_maxlen,))

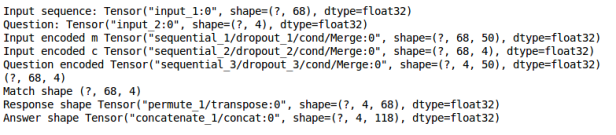

print('Input sequence:', input_sequence)

print('Question:', question)

# encoders

# embed the input sequence into a sequence of vectors

input_encoder_m = Sequential()

input_encoder_m.add(Embedding(input_dim=vocab_size,

output_dim=embed_size))

input_encoder_m.add(Dropout(dropout_rate))

# output: (samples, story_maxlen, embedding_dim)

# embed the input into a sequence of vectors of size query_maxlen

input_encoder_c = Sequential()

input_encoder_c.add(Embedding(input_dim=vocab_size,

output_dim=query_maxlen))

input_encoder_c.add(Dropout(dropout_rate))

# output: (samples, story_maxlen, query_maxlen)

# embed the question into a sequence of vectors

question_encoder = Sequential()

question_encoder.add(Embedding(input_dim=vocab_size,

output_dim=embed_size,

input_length=query_maxlen))

question_encoder.add(Dropout(dropout_rate))

# output: (samples, query_maxlen, embedding_dim)

# encode input sequence and questions (which are indices)

# to sequences of dense vectors

input_encoded_m = input_encoder_m(input_sequence)

print('Input encoded m', input_encoded_m)

input_encoded_c = input_encoder_c(input_sequence)

print('Input encoded c', input_encoded_c)

question_encoded = question_encoder(question)

print('Question encoded', question_encoded)

# compute a 'match' between the first input vector sequence

# and the question vector sequence

# shape: `(samples, story_maxlen, query_maxlen)

match = dot([input_encoded_m, question_encoded], axes=-1, normalize=False)

print(match.shape)

match = Activation('softmax')(match)

print('Match shape', match)

# add the match matrix with the second input vector sequence

response = add([match, input_encoded_c]) # (samples, story_maxlen, query_maxlen)

response = Permute((2, 1))(response) # (samples, query_maxlen, story_maxlen)

print('Response shape', response)

# concatenate the response vector with the question vector sequence

answer = concatenate([response, question_encoded])

print('Answer shape', answer)

answer = LSTM(lstm_size)(answer) # Generate tensors of shape 32

answer = Dropout(dropout_rate)(answer)

answer = Dense(vocab_size)(answer) # (samples, vocab_size)

# we output a probability distribution over the vocabulary

answer = Activation('softmax')(answer)

In the above code snippet, you can see we have two inputs : input_sequence (story) and question (query). Also, we have ‘answer’ as a final model which combines response model and encoded query as input and predicts the answer word.

3. Training the Model

The below codes complies the model and train it using input features vectors as well as labels extracted in the first part of this blog-post series.

# build the final model

model = Model([input_sequence, question], answer)

model.compile(optimizer='rmsprop', loss='categorical_crossentropy',

metrics=['accuracy'])

print(model.summary())

# start training the model

model.fit([inputs_train, queries_train],

answers_train, batch_size, train_epochs,

callbacks=[TrainingVisualizer()],

validation_data=([inputs_test, queries_test], answers_test))

# save model

model.save('model.h5')

4. Visualization

The below python function shows visualization of training and validation categorical cross entropy loss as well as train and validation accuracy over iterations. This function is used as callback function while model training.

class TrainingVisualizer(keras.callbacks.History):

def on_epoch_end(self, epoch, logs={}):

super().on_epoch_end(epoch, logs)

IPython.display.clear_output(wait=True)

pd.DataFrame({key: value for key, value in self.history.items() if key.endswith('loss')}).plot()

axes = pd.DataFrame({key: value for key, value in self.history.items() if key.endswith('acc')}).plot()

axes.set_ylim([0, 1])

plt.show()

5. Tests Results

I hope you have enjoyed the model building process till now. Let us check few of the test stories and see how the model performs in predicting the right answer to the query.

for i in range(0,10):

current_inp = test_stories[i]

current_story, current_query, current_answer = vectorize_stories([current_inp], word_idx, story_maxlen, query_maxlen)

current_prediction = model.predict([current_story, current_query])

current_prediction = idx_word[np.argmax(current_prediction)]

print(' '.join(current_inp[0]), ' '.join(current_inp[1]), '| Prediction:', current_prediction, '| Ground Truth:', current_inp[2])

print("--------------------------------------------------------------")

John travelled to the hallway . Mary journeyed to the bathroom . Where is John ? | Prediction: hallway | Ground Truth: hallway --------------------------------------------------------------------- John travelled to the hallway . Mary journeyed to the bathroom . Daniel went back to the bathroom . John moved to the bedroom . Where is Mary ? | Prediction: bathroom | Ground Truth: bathroom --------------------------------------------------------------------- John travelled to the hallway . Mary journeyed to the bathroom . Daniel went back to the bathroom . John moved to the bedroom . John went to the hallway . Sandra journeyed to the kitchen . Where is Sandra ? | Prediction: kitchen | Ground Truth: kitchen --------------------------------------------------------------------- John travelled to the hallway . Mary journeyed to the bathroom . Daniel went back to the bathroom . John moved to the bedroom . John went to the hallway . Sandra journeyed to the kitchen . Sandra travelled to the hallway . John went to the garden . Where is Sandra ? | Prediction: hallway | Ground Truth: hallway --------------------------------------------------------------------- John travelled to the hallway . Mary journeyed to the bathroom . Daniel went back to the bathroom . John moved to the bedroom . John went to the hallway . Sandra journeyed to the kitchen . Sandra travelled to the hallway . John went to the garden . Sandra went back to the bathroom . Sandra moved to the kitchen . Where is Sandra ? | Prediction: kitchen | Ground Truth: kitchen --------------------------------------------------------------------- Sandra travelled to the kitchen . Sandra travelled to the hallway . Where is Sandra ? | Prediction: hallway | Ground Truth: hallway --------------------------------------------------------------------- Sandra travelled to the kitchen . Sandra travelled to the hallway . Mary went to the bathroom . Sandra moved to the garden . Where is Sandra ? | Prediction: garden | Ground Truth: garden --------------------------------------------------------------------- Sandra travelled to the kitchen . Sandra travelled to the hallway . Mary went to the bathroom . Sandra moved to the garden . Sandra travelled to the office . Daniel journeyed to the hallway . Where is Daniel ? | Prediction: hallway | Ground Truth: hallway --------------------------------------------------------------------- Sandra travelled to the kitchen . Sandra travelled to the hallway . Mary went to the bathroom . Sandra moved to the garden . Sandra travelled to the office . Daniel journeyed to the hallway . Daniel journeyed to the office . John moved to the hallway . Where is Sandra ? | Prediction: office | Ground Truth: office --------------------------------------------------------------------- Sandra travelled to the kitchen . Sandra travelled to the hallway . Mary went to the bathroom . Sandra moved to the garden . Sandra travelled to the office . Daniel journeyed to the hallway . Daniel journeyed to the office . John moved to the hallway . John travelled to the bathroom . John journeyed to the office . Where is Daniel ? | Prediction: office | Ground Truth: office ---------------------------------------------------------------------

Reference

[1] End to End Memory Networks

[2] Towards AI-Complete Question Answering: A Set of Prerequisite Toy Tasks

[3] Example code : bAbI_rnn.py from keras-team/keras GitHub repository

Final Thoughts

Hope it was easy to follow this blog-post series on developing question and answering system for a toy task on bAbI data-set. If you are totally unaware of neural networks or Q&A systems then I would suggest to first go through the basics of Neural Networks and read more on Q&A system from the abundant material available online. Before signing off, I would like to put few more thoughts on this post and for future:

- The data-set is a synthetic data-set with limited data (10k stories only) and has a very limited vocabulary of 22 unique words.

- The prediction task is based on single supporting fact. It would be great to check the performance on reasoning tasks where prediction is based on multiple facts.

- A more generic Q&A system should allow machine reading comprehension (MRC) abilities where system should be able to predict span of words (sentence) from reading a paragraph (based on multiple supporting facts).

- Readers who are encouraged to explore Q&A systems more can check an open source SQUAD2.0 data-set which is much more rigorous task and would require further research.

You can get the full python implementation (jupyter notebook) from GitHub link here.

This blog-post series was intended to perform experiments and demonstrate a fair idea of Q&A systems. Personally, I am more interested in MRC application where the answer to every question is a segment of text, or span, from the corresponding reading passage, or the question might be unanswerable. Hopefully, I will be writing solution to MRC task on Squad data-set in near future. Stay tuned !!.

If you liked the post, follow this blog to get updates about upcoming articles. Also, share it so that it can reach out to the readers who can actually gain from this. Please feel free to discuss anything regarding the post. I would love to hear feedback from you.

Happy deep learning 🙂

Hi, I’m building a model in which there is one question and 4 options out of which one is correct I want to know how should I edit your code such that it works in this situation.

My other question is if I’m not using your data have let’s say Wikipedia dataset and questions will your model will work in that situation as well?

Like

hello sir,

you have explained qa system very well..very informative…please abhijeet sir..i need your email id..i have some query regarding QA system

Like