This blog post demonstrates how to build an emotion recognition system that classifies the emotion of a face detected (as a bounding box) in real-time video or in still images.

Introduction

A facial emotion recognition system is a two-step process: face detection (finding a bounding box around the face) in the image, followed by emotion detection on the detected face. The following two techniques are used for these two tasks.

- Haar feature-based cascade classifiers: They detect frontal faces in an image well, run in real time and are fast compared with many other face detectors. This blog post uses the implementation from OpenCV.

- Xception CNN Model (Mini_Xception, 2017): We will train a classification CNN that takes a bounded face (48×48 pixels) as input and predicts the probabilities of 7 emotions in its output layer.

Data-set

One can download the facial expression recognition (FER) data-set from the Kaggle challenge here. The data consists of 48×48 pixel gray-scale images of faces. The faces have been automatically registered so that each face is more or less centered and occupies about the same amount of space in each image. The task is to categorize each face, based on the emotion shown in the facial expression, into one of seven categories (0=Angry, 1=Disgust, 2=Fear, 3=Happy, 4=Sad, 5=Surprise, 6=Neutral).

The full data-set consists of 35,887 examples. train.csv contains two columns, “emotion” and “pixels” (the complete fer2013.csv file used in the code below also has a “Usage” column marking each row as training, public test or private test data). The “emotion” column contains a numeric code ranging from 0 to 6, inclusive, for the emotion that is present in the image. The “pixels” column contains a quoted string for each image; the contents of this string are space-separated pixel values in row-major order.
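As a minimal sketch of the row-major layout described above, the helper below (a hypothetical name, not part of the original code) turns one “pixels” string into a 48×48 NumPy array. A tiny 2×2 example makes the ordering easy to see.

```python
import numpy as np


def pixels_to_image(pixel_string, size=48):
    """Convert one space-separated "pixels" entry into a size x size uint8 image."""
    values = np.array([int(v) for v in pixel_string.split()], dtype=np.uint8)
    if values.size != size * size:
        raise ValueError(f"expected {size * size} values, got {values.size}")
    # row-major (C order) reshape: the first `size` values form the top row
    return values.reshape(size, size)


# A real FER row has 48 * 48 = 2304 values; a 2x2 toy string shows the ordering.
toy = pixels_to_image("10 20 30 40", size=2)
print(toy)
# [[10 20]
#  [30 40]]
```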

Loading FER Data-set

The code below loads the data-set and pre-processes the images before feeding them to the CNN model. It contains two functions:

1. def load_fer2013: It reads the csv file and converts the pixel sequence of each row into an image of dimension 48×48. It returns the faces and the emotion labels.

2. def preprocess_input: It is a standard way to pre-process images by scaling them to the range [-1, 1]. The images are first scaled to [0, 1] by dividing them by 255; subtracting 0.5 and multiplying by 2 then changes the range to [-1, 1]. Zero-centered inputs in [-1, 1] are a common choice for neural network models in computer vision, since they tend to make optimization better behaved than raw [0, 255] values.

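```python
import cv2
import numpy as np
import pandas as pd
from sklearn.model_selection import train_test_split

dataset_path = 'fer2013/fer2013.csv'
image_size = (48, 48)


def load_fer2013():
    """Read the FER csv file and return the face images and one-hot emotion labels."""
    data = pd.read_csv(dataset_path)
    pixels = data['pixels'].tolist()
    width, height = 48, 48
    faces = []
    for pixel_sequence in pixels:
        # each row is a space-separated string of 48 * 48 gray-scale values (row-major)
        face = [int(pixel) for pixel in pixel_sequence.split(' ')]
        face = np.asarray(face).reshape(height, width)
        face = cv2.resize(face.astype('uint8'), image_size)
        faces.append(face.astype('float32'))
    faces = np.asarray(faces)
    faces = np.expand_dims(faces, -1)  # add a channel axis: (N, 48, 48, 1)
    # one-hot encode the 0-6 labels (DataFrame.as_matrix() was removed in pandas 1.0)
    emotions = pd.get_dummies(data['emotion']).to_numpy(dtype='float32')
    return faces, emotions


def preprocess_input(x, v2=True):
    """Scale pixels from [0, 255] to [0, 1], and further to [-1, 1] if v2 is True."""
    x = x.astype('float32')
    x = x / 255.0
    if v2:
        x = x - 0.5
        x = x * 2.0
    return x


faces, emotions = load_fer2013()
faces = preprocess_input(faces)
xtrain, xtest, ytrain, ytest = train_test_split(faces, emotions, test_size=0.2, shuffle=True)
```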
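To make the scaling in preprocess_input concrete, the sketch below checks it on three pixel values and adds a hypothetical inverse helper, deprocess (not part of the original code), that maps [-1, 1] back to [0, 255], which can be handy for displaying a pre-processed face.

```python
import numpy as np


def preprocess_input(x, v2=True):
    """Same scaling as above: [0, 255] -> [0, 1], then -> [-1, 1] when v2 is True."""
    x = x.astype('float32') / 255.0
    if v2:
        x = (x - 0.5) * 2.0
    return x


def deprocess(x):
    """Hypothetical inverse of preprocess_input(v2=True): [-1, 1] -> [0, 255] uint8."""
    return np.clip(np.round((x / 2.0 + 0.5) * 255.0), 0, 255).astype('uint8')


pixels = np.array([0, 51, 255], dtype='uint8')
scaled = preprocess_input(pixels)  # approximately [-1.0, -0.6, 1.0]
restored = deprocess(scaled)       # back to the original pixel values
print(scaled, restored)
```

Black (0) maps to -1, white (255) maps to 1 and mid-gray lands near 0, so the inputs are centered around zero.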

Five sample expressions for each of the 7 emotions in the data-set can be seen below.

In the data-set provided at the Kaggle link, each image is stored as a string: a 1×2304 row vector holding the 48×48 image. The strings in the .csv files can be converted into images using the code in the GitHub link here.

Training the CNN Model: Mini Xception

Here comes the exciting part: an architecture that is comparatively small yet, according to its authors, achieves close to state-of-the-art performance in classifying emotions on this data-set. The architecture below was proposed by Octavio Arriaga et al. in this paper [3].

One can notice that the center block is repeated 4 times in the design. This architecture differs from the most common CNN architectures, like the one used in the blog post here. Common architectures use fully connected layers at the end, where most of the parameters reside, and they use standard convolutions. Modern CNN architectures such as Xception instead combine two of the most successful experimental assumptions in CNNs: residual modules and depth-wise separable convolutions.
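The effect of the base block and the four repeated modules on the spatial size of the feature maps can be worked out by hand. The sketch below assumes the layer settings of the Keras code in this post: two unpadded ('valid') 3×3 convolutions with stride 1, then four modules that each halve the size with a stride-2, 'same'-padded max-pooling (matched by a stride-2 1×1 convolution on the residual path, so the two branches can be added). The function names are illustrative only.

```python
import math


def conv_valid(size, kernel=3, stride=1):
    """Output size of an unpadded ('valid') convolution."""
    return (size - kernel) // stride + 1


def same_stride2(size):
    """Output size of a stride-2 layer with 'same' padding: ceil(size / 2)."""
    return math.ceil(size / 2)


def trace_mini_xception(size=48, module_filters=(16, 32, 64, 128), num_classes=7):
    """List (layer, side length, channels) through the network."""
    shapes = [("input", size, 1)]
    for _ in range(2):  # base: two 3x3 convolutions with 8 filters
        size = conv_valid(size)
        shapes.append(("base conv", size, 8))
    for i, filters in enumerate(module_filters, start=1):
        # separable convs keep the size ('same'); pooling and the residual 1x1 conv halve it
        size = same_stride2(size)
        shapes.append((f"module {i}", size, filters))
    shapes.append(("class conv", size, num_classes))  # 'same' 3x3 conv, one map per class
    shapes.append(("global avg pool", 1, num_classes))  # a vector of num_classes scores
    return shapes


for name, side, channels in trace_mini_xception():
    print(f"{name:>15}: {side}x{side}x{channels}")
```

The 48×48 input shrinks to 44×44 after the base block and then to 22, 11, 6 and 3 after the four modules, so the final classification convolution works on 3×3 maps.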

There are various techniques to keep in mind while building a deep neural network, and most of them apply to computer vision problems in general. Below are a few of the techniques used while training the CNN model below.

- Data Augmentation: More training data is generated from the training set by applying random transformations to the images. This helps when the training set is too small for the network to learn good representations. Typical transformations include rotation, cropping, shifting, shearing, zooming, flipping and normalization; the code below uses random rotations of up to 10 degrees, width/height shifts and zooms of up to 10%, and horizontal flips.

- Kernel_regularizer: It applies penalties on layer parameters during optimization; these penalties are added to the loss function that the network minimizes. The kernel_regularizer argument used in the convolution layers below is an L2 regularization of the weights. It penalizes large weights, which discourages the network from relying heavily on only a few inputs.

- BatchNormalization: It normalizes the activations of the previous layer over each batch, i.e. it applies a transformation that keeps the mean activation close to 0 and the activation standard deviation close to 1. It was introduced to address the problem of internal covariate shift. It also acts as a regularizer, in some cases eliminating the need for Dropout, and it helps speed up training.

- Global Average Pooling: It reduces each feature map to a scalar value by averaging over all elements of the feature map. The averaging forces the network to extract global features from the input image. In the model below it replaces fully connected layers: the last convolution produces one feature map per emotion class, and global average pooling turns each map into one score per class.

- Depthwise Separable Convolution: These convolutions are composed of two different layers: depth-wise convolutions and point-wise convolutions. A depth-wise convolution applies one spatial filter to each input channel separately, and a point-wise (1×1) convolution then combines the channels. This needs far fewer parameters, and less computation, than a standard convolution. A very nice visual explanation of the difference between standard and depth-wise separable convolutions is given in the paper.
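Several of these ideas reduce to simple arithmetic. The NumPy sketch below (an illustration, not Keras code; function names are hypothetical) counts the weights of a standard versus a depth-wise separable 3×3 convolution, ignoring biases since the model uses use_bias=False, applies global average pooling to a small feature tensor, and computes the penalty λ·Σw² that Keras's l2(0.01) adds to the loss for one weight tensor.

```python
import numpy as np


def standard_conv_params(k, c_in, c_out):
    """Weights of a k x k standard convolution (no bias)."""
    return k * k * c_in * c_out


def separable_conv_params(k, c_in, c_out):
    """One k x k depth-wise filter per input channel, then a 1 x 1 point-wise convolution."""
    return k * k * c_in + c_in * c_out


def global_average_pooling(feature_maps):
    """(height, width, channels) -> (channels,): the mean of each feature map."""
    return feature_maps.mean(axis=(0, 1))


def l2_penalty(weights, lam=0.01):
    """Term added to the loss for one weight tensor: lam * sum(w ** 2)."""
    return lam * np.sum(np.square(weights))


# 3x3 convolution from 64 to 128 channels, as in the first separable conv of module 4
print(standard_conv_params(3, 64, 128))   # 73728
print(separable_conv_params(3, 64, 128))  # 8768

maps = np.arange(3 * 3 * 2, dtype=float).reshape(3, 3, 2)
print(global_average_pooling(maps))       # [8. 9.]
print(l2_penalty(np.array([1.0, -2.0, 3.0])))
```

For this layer the separable version needs roughly 8 times fewer weights, which is a large part of why the model stays small.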

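The Python code below implements the above architecture in Keras. It continues from the data-loading code above and uses its xtrain, ytrain, xtest and ytest arrays.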

```python
import os

from keras import layers
from keras.callbacks import CSVLogger, EarlyStopping, ModelCheckpoint, ReduceLROnPlateau
from keras.layers import Activation, BatchNormalization, Conv2D, GlobalAveragePooling2D
from keras.layers import Input, MaxPooling2D, SeparableConv2D
from keras.models import Model
from keras.preprocessing.image import ImageDataGenerator
from keras.regularizers import l2

# parameters
batch_size = 32
num_epochs = 110
input_shape = (48, 48, 1)
verbose = 1
num_classes = 7
patience = 50
base_path = 'models/'
l2_regularization = 0.01

# the training log and the checkpoints are written here, so the folder must exist
os.makedirs(base_path, exist_ok=True)

# data generator: random augmentation of the training images
data_generator = ImageDataGenerator(
    featurewise_center=False,
    featurewise_std_normalization=False,
    rotation_range=10,
    width_shift_range=0.1,
    height_shift_range=0.1,
    zoom_range=.1,
    horizontal_flip=True)

# model parameters
regularization = l2(l2_regularization)

# base: two plain 3x3 convolutions
img_input = Input(input_shape)
x = Conv2D(8, (3, 3), strides=(1, 1), kernel_regularizer=regularization, use_bias=False)(img_input)
x = BatchNormalization()(x)
x = Activation('relu')(x)
x = Conv2D(8, (3, 3), strides=(1, 1), kernel_regularizer=regularization, use_bias=False)(x)
x = BatchNormalization()(x)
x = Activation('relu')(x)

# modules 1-4: the same residual block of depth-wise separable convolutions,
# with 16, 32, 64 and 128 filters respectively
for filters in (16, 32, 64, 128):
    # shortcut branch: strided 1x1 convolution so its shape matches the main branch
    residual = Conv2D(filters, (1, 1), strides=(2, 2), padding='same', use_bias=False)(x)
    residual = BatchNormalization()(residual)
    # main branch: two separable convolutions followed by strided max pooling
    x = SeparableConv2D(filters, (3, 3), padding='same', kernel_regularizer=regularization, use_bias=False)(x)
    x = BatchNormalization()(x)
    x = Activation('relu')(x)
    x = SeparableConv2D(filters, (3, 3), padding='same', kernel_regularizer=regularization, use_bias=False)(x)
    x = BatchNormalization()(x)
    x = MaxPooling2D((3, 3), strides=(2, 2), padding='same')(x)
    x = layers.add([x, residual])

# one feature map per class, averaged into one score per class
x = Conv2D(num_classes, (3, 3), padding='same')(x)
x = GlobalAveragePooling2D()(x)
output = Activation('softmax', name='predictions')(x)

model = Model(img_input, output)
model.compile(optimizer='adam', loss='categorical_crossentropy', metrics=['accuracy'])
model.summary()

# callbacks
log_file_path = base_path + '_emotion_training.log'
csv_logger = CSVLogger(log_file_path, append=False)
early_stop = EarlyStopping('val_loss', patience=patience)
reduce_lr = ReduceLROnPlateau('val_loss', factor=0.1, patience=int(patience / 4), verbose=1)
trained_models_path = base_path + '_mini_XCEPTION'
# Keras 2.3+ logs this metric as 'val_accuracy' (older versions used 'val_acc')
model_names = trained_models_path + '.{epoch:02d}-{val_accuracy:.2f}.hdf5'
model_checkpoint = ModelCheckpoint(model_names, 'val_loss', verbose=1, save_best_only=True)
callbacks = [model_checkpoint, csv_logger, early_stop, reduce_lr]

# recent Keras / tf.keras versions accept a generator in model.fit;
# older versions need model.fit_generator with the same arguments
model.fit(data_generator.flow(xtrain, ytrain, batch_size),
          steps_per_epoch=len(xtrain) // batch_size,
          epochs=num_epochs, verbose=verbose, callbacks=callbacks,
          validation_data=(xtest, ytest))
```

The model reaches 65-66% accuracy on the validation set during training. The CNN learns representative features of the emotions from the training images. Below are a few epochs of the training process, run with a batch size of 64.
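Because ModelCheckpoint uses save_best_only=True with val_loss, a new file is written only when val_loss improves, and each name encodes the epoch and the validation accuracy, e.g. _mini_XCEPTION.106-0.65.hdf5 (epoch 106, 0.65). Within a single training run, the file with the highest epoch is therefore the one with the lowest val_loss. The sketch below is a hypothetical helper (not part of the original code) that picks that file, assuming the naming pattern used in the training code above.

```python
import re
from pathlib import Path

# matches names such as "_mini_XCEPTION.106-0.65.hdf5" (epoch 106, validation accuracy 0.65)
CHECKPOINT_RE = re.compile(r"_mini_XCEPTION\.(\d+)-(\d+\.\d+)\.hdf5$")


def parse_checkpoint(name):
    """Return (epoch, val_accuracy) for a checkpoint file name, or None if it does not match."""
    match = CHECKPOINT_RE.search(name)
    if match is None:
        return None
    return int(match.group(1)), float(match.group(2))


def latest_checkpoint(names):
    """Return the matching name with the highest epoch, or None if nothing matches."""
    parsed = [(parse_checkpoint(n), n) for n in names]
    parsed = [(info, n) for info, n in parsed if info is not None]
    if not parsed:
        return None
    return max(parsed, key=lambda item: item[0][0])[1]


def latest_in_folder(folder="models"):
    """Apply latest_checkpoint to the .hdf5 files found in a folder."""
    return latest_checkpoint(p.name for p in Path(folder).glob("*.hdf5"))


print(latest_checkpoint(["_mini_XCEPTION.02-0.40.hdf5", "_mini_XCEPTION.106-0.65.hdf5"]))
# _mini_XCEPTION.106-0.65.hdf5
```

If several training runs write into the same folder, the epoch numbers of different runs get mixed, so clearing the folder between runs keeps this rule valid.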

Testing the Model

On Images

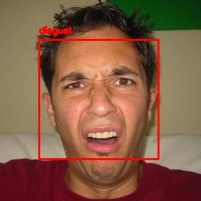

While testing the trained model, I noticed that it classifies a face as neutral if the expression is not distinctive enough. The output layer of the trained mini_Xception CNN model gives a probability for each emotion class. The 18 facial expressions shown here were taken from Google Images to validate the trained model.

To detect the emotion in a single image, one can run the Python code below.

```python
import os
import sys

import cv2
import numpy as np
from keras.models import load_model

# parameters for loading data and images
detection_model_path = 'haarcascade_files/haarcascade_frontalface_default.xml'
emotion_model_path = 'models/_mini_XCEPTION.106-0.65.hdf5'
img_path = sys.argv[1]

# loading models
face_detection = cv2.CascadeClassifier(detection_model_path)
emotion_classifier = load_model(emotion_model_path, compile=False)
EMOTIONS = ["angry", "disgust", "scared", "happy", "sad", "surprised", "neutral"]

# reading the image: a color copy for drawing, a gray-scale copy for the models
orig_frame = cv2.imread(img_path)
frame = cv2.imread(img_path, cv2.IMREAD_GRAYSCALE)
faces = face_detection.detectMultiScale(frame, scaleFactor=1.1, minNeighbors=5,
                                        minSize=(30, 30), flags=cv2.CASCADE_SCALE_IMAGE)

if len(faces) > 0:
    # boxes are (x, y, w, h): keep the face with the largest area w * h
    (fX, fY, fW, fH) = max(faces, key=lambda f: f[2] * f[3])
    roi = frame[fY:fY + fH, fX:fX + fW]
    roi = cv2.resize(roi, (48, 48))
    # same scaling as preprocess_input(v2=True) during training: [0, 255] -> [-1, 1]
    roi = (roi.astype('float32') / 255.0 - 0.5) * 2.0
    roi = roi[np.newaxis, :, :, np.newaxis]  # shape (1, 48, 48, 1)
    preds = emotion_classifier.predict(roi)[0]
    emotion_probability = np.max(preds)
    label = EMOTIONS[preds.argmax()]
    print(f'{label}: {emotion_probability:.2f}')
    cv2.putText(orig_frame, label, (fX, fY - 10), cv2.FONT_HERSHEY_SIMPLEX, 0.45, (0, 0, 255), 2)
    cv2.rectangle(orig_frame, (fX, fY), (fX + fW, fY + fH), (0, 0, 255), 2)

cv2.imshow('test_face', orig_frame)
os.makedirs('test_output', exist_ok=True)
cv2.imwrite(os.path.join('test_output', os.path.basename(img_path)), orig_frame)
if cv2.waitKey(2000) & 0xFF == ord('q'):
    sys.exit("Thanks")
cv2.destroyAllWindows()
```
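Two details of the script above are easy to get wrong: detectMultiScale returns each face as (x, y, w, h), so the largest face is the one with the largest w * h, and the model input should be scaled exactly as in training (preprocess_input with v2=True, i.e. to [-1, 1]). The NumPy-only sketch below isolates that logic so it can be checked without a camera or a trained model; the helper names are hypothetical, and preds stands for the 7-value output of emotion_classifier.predict. Returning None when no face is found lets a caller skip the prediction step instead of using an undefined variable.

```python
import numpy as np

EMOTIONS = ["angry", "disgust", "scared", "happy", "sad", "surprised", "neutral"]


def largest_face(boxes):
    """Return the (x, y, w, h) box with the largest area, or None if there are no boxes."""
    if len(boxes) == 0:
        return None
    return tuple(int(v) for v in max(boxes, key=lambda b: b[2] * b[3]))


def to_model_input(face_48x48):
    """Scale a 48x48 gray crop to [-1, 1] (as in training) and shape it as (1, 48, 48, 1)."""
    x = (face_48x48.astype("float32") / 255.0 - 0.5) * 2.0
    return x[np.newaxis, :, :, np.newaxis]


def top_emotion(preds):
    """Map a vector of class probabilities to (label, probability)."""
    idx = int(np.argmax(preds))
    return EMOTIONS[idx], float(preds[idx])


boxes = np.array([[10, 10, 40, 40], [100, 50, 80, 90]])  # e.g. detectMultiScale output
print(largest_face(boxes))  # (100, 50, 80, 90)
print(largest_face(()))     # None
print(top_emotion(np.array([0.05, 0.0, 0.05, 0.7, 0.1, 0.05, 0.05])))  # ('happy', 0.7)
```

For the two boxes above, a key such as (w - x) * (h - y) would wrongly prefer the first, smaller box, because the second box gets a negative "area".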

On Video

To detect emotions in a webcam stream, one can run the Python code here.

References

The demonstration code has been adapted from the following sources.

[1] https://github.com/oarriaga/face_classification

[2] https://github.com/omar178/Emotion-recognition

[3] O. Arriaga, M. Valdenegro-Toro, P. Plöger, Real-time Convolutional Neural Networks for Emotion and Gender Classification, 2017.

If you liked the post, follow this blog to get updates about upcoming articles. Also, share it so that it reaches the readers who can actually benefit from it. Please feel free to discuss anything regarding the post. I would love to hear your feedback.

Happy deep learning 🙂

How long does training take? For me, one epoch takes nearly 2 hours.

Is it fine to reduce the number of epochs?

To achieve an accuracy of 65%, you may have to run it for at least 90 epochs.

Do you have a GPU?

Training takes much less time on a GPU. Running it on a CPU is not advisable; it would take a long time.

Okay, thanks. I am trying it on a CPU.

If you are on a CPU, I would suggest trying out the already trained model from https://github.com/abhijeet3922/FaceEmotion_ID/tree/master/models

The “_mini_XCEPTION.106-0.65.hdf5” model should be good to use.

Hey, can we incorporate multi-threading into the program in any way? Each epoch takes me nearly 350 seconds. Is there any way to reduce that?

Are you talking about training or testing?

Are you running it on a CPU or a GPU?

Training a DNN already executes in parallel.

Abhijeet, I only get two emotions with your model: happy and disgust. Please help.

Will the code for detection in an image be a separate Python file, or will it be in the same file in which we trained the models?

It will be in a separate file. See my GitHub repo:

https://github.com/abhijeet3922/FaceEmotion_ID

Many thanks for the tutorial, Mr. Kumar, and hello everyone,

I'm a beginner in the field of machine learning.

I have tried to reproduce this model for two days but still get a permission error:

PermissionError: [Errno 13] Permission denied: './models/_emotion_training.log'

Can someone please help?

How can I add speech recognition to the emotion recognition in the while True loop, so that it detects the emotion and listens to what I say simultaneously?

Please help me.

The training process creates many hdf5 files in the models folder, but facial_emotion_image.py and real_time_video.py use only one of them, _mini_XCEPTION.106-0.65.hdf5. How do we use the other files?

Good one. This can be applied to categorize movies or video clips. We can also get an emotional graph of a video clip.

Hello sir,

How do you extract the features from the fer2013 data-set? Is there any specific algorithm within the convolutional neural network?

Hi Vignesh,

You need to understand and read about neural networks.

With these models, you do not need to extract hand-crafted features.

Neural networks learn features automatically when trained.

I'm using your code, but I don't get the same results. It fails on most of the pictures.

And I can't run the real-time detection:

Traceback (most recent call last):

File "real_time_video.py", line 49, in

for (i, (emotion, prob)) in enumerate(zip(EMOTIONS, preds)):

NameError: name 'preds' is not defined

I didn't change anything in your code, and preds is defined on line 44:

preds = emotion_classifier.predict(roi)[0]

Any help?

Hi,

I remember facing this error quite a few times while I was experimenting. You would need to debug the preds variable. Looking at the code, I believe the “preds” variable is initialized inside the “if len(faces) > 0:” block.

It may be that faces are not being detected on your system, so the “preds” variable is never initialized, which leads to that error.

Do comment if you are able to debug it.

The solution is here

https://github.com/omar178/Emotion-recognition/issues/1#issuecomment-485226615

ValueError: Error when checking target: expected predictions to have shape (7,) but got array with shape (6,)

It works in a Jupyter notebook (Anaconda), but not in Colab, where it shows this error.

Can you please give me code that also runs on Colab?

65% accuracy is too low. How can I improve it to 80%?

Hi, I am trying to go through this tutorial, but I got an error. It says:

FileNotFoundError: [Errno 2] No such file or directory: 'models/_emotion_training.log'

I downloaded the complete data-set of the Kaggle challenge, but I cannot find a file named “_emotion_training.log” in the downloads. Is it supposed to come with the download, or is it generated somewhere in the code while training the CNN model?

I am thankful for every answer 🙂

I found the solution. I just needed to create a new folder named “models”. That’s it.

Hi,

I am getting the same error. Could you please tell me how to solve it?

Thank you.

Creating a new folder named ‘models’ in the current working directory solves this problem.

Hi, thanks for the code. I made the indentation changes, but now I get this error:

Traceback (most recent call last):

File "real_time_video.py", line 48, in

preds = emotion_classifier.predict(roi)[0]

NameError: name 'roi' is not defined

Do you have any idea how to fix it?

Sir, I am getting an error in train_emotion_classifier.py:

Traceback (most recent call last):

File "train_emotion_classifier.py", line 156, in

validation_data=(xtest,ytest))

File "C:\Users\Hp\PycharmProjects\FaceDepression_ID-master\depression\lib\site-packages\keras\legacy\interfaces.py", line 91, in wrapper

return func(*args, **kwargs)

File "C:\Users\Hp\PycharmProjects\FaceDepression_ID-master\depression\lib\site-packages\keras\engine\training.py", line 1732, in fit_generator

initial_epoch=initial_epoch)

File "C:\Users\Hp\PycharmProjects\FaceDepression_ID-master\depression\lib\site-packages\keras\engine\training_generator.py", line 260, in fit_generator

callbacks.on_epoch_end(epoch, epoch_logs)

File "C:\Users\Hp\PycharmProjects\FaceDepression_ID-master\depression\lib\site-packages\keras\callbacks\callbacks.py", line 152, in on_epoch_end

callback.on_epoch_end(epoch, logs)

File "C:\Users\Hp\PycharmProjects\FaceDepression_ID-master\depression\lib\site-packages\keras\callbacks\callbacks.py", line 702, in on_epoch_end

filepath = self.filepath.format(epoch=epoch + 1, **logs)

KeyError: 'val_acc'

Please help me solve this error.

Hello Vivek,

Did you find a solution to this problem? I am facing it too; please let me know of any workaround.

This might be a problem with newer versions of Keras.

Refer to this: https://github.com/keras-team/keras/issues/6104#issuecomment-309579772

Try the solutions there, and kindly post if you are able to solve it.

It may be an issue with the Keras version.

Try the solutions from the similar issue raised here:

https://github.com/keras-team/keras/issues/6104

I didn't understand how to train on the data: where do I need to put the JPG files?

Also, is it possible to turn this into face recognition with names as well as emotions (for multiple people)?

How can I fix this error, please?

FileNotFoundError: [Errno 2] No such file or directory: 'models/_emotion_training.log'

The data-set is imported from my Drive. I checked all folders and there is no file called _emotion_training.log.

Please help.