One of the most frequently encountered tasks in ML applications is classification. Of the plethora of classifiers at our disposal, Support Vector Machines (SVMs) are arguably among the most widely used. Yet the intuition behind how they work and their key concepts are seldom understood, and understanding is often reduced to learning a few buzzwords such as hyperplanes, kernels and support vectors. This post is an attempt to unravel the mystery that SVMs tend to become for beginners in ML.

Introduction – Hyperplane and Margin

An SVM performs two-class classification by building a classifier from a training set, which makes it a supervised algorithm. Each training point consists of features and a class label. An SVM aims to find a separating boundary that lies as far as possible from the nearest training points of either class. This boundary is called the optimal hyperplane, and the distance to those nearest points is the margin. Notice the word optimal used with hyperplane: it's easy to imagine that there will be many boundaries that can separate the data points of the 2 classes, but the fact that an SVM finds the one located as far as possible from the data points of either class makes it optimal. The figure below illustrates this important concept about SVMs.

The points plotted in the figure are feature vectors for 40 speech files belonging to 2 emotion classes, happy and sad (20 each). The feature vectors have been projected to a 2D space from their high dimensional space by applying principal component analysis (PCA) for visualization purposes. You may have many questions after reading this: where these data points come from, why PCA has been used, what the 2 axes are, etc. Don't worry too much. Just understand that we have a dataset of points from 2 classes (happy and sad) which we want to separate. In the figure above, while the pink, cyan and brown lines also separate the data points of these 2 classes, it's the blue line which is the optimal separating hyperplane.

We move ahead by explaining the concept of margin. The margin is the distance between the optimal hyperplane and the training data point closest to it. This distance, when taken on both sides of the hyperplane, forms a region in which no training data point lies. An SVM intends to make this region as wide as possible, and the optimal hyperplane is selected precisely so that this target is met.

If the SVM were to choose a hyperplane that is quite close to any of the data points, the margin would be small.

Thus, an SVM builds a classifier by searching for a separating hyperplane which is optimal and maximizes the margin.

It's time to delve into the mathematics behind the above keywords. Firstly, let's clear the air about what a hyperplane is. In the figures above, we saw our separating hyperplane to be a line. Then why did we call it a plane at all, let alone a hyperplane? In essence, a hyperplane is a generalization of a plane: a flat boundary with one dimension fewer than the space it lives in. It is a point for 1 dimensional data, a line when the data points have 2 dimensions, a plane in 3 dimensions, and is called a hyperplane when the dimensionality exceeds 3.

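The equation of a hyperplane is

$$\mathbf{w}^T \mathbf{x} = 0 \qquad (1)$$

where $\mathbf{w}$ and $\mathbf{x}$ are both vectors. $\mathbf{w}^T \mathbf{x}$ is actually the dot product of $\mathbf{w}$ and $\mathbf{x}$, thus $\mathbf{w}^T \mathbf{x}$ and $\mathbf{w} \cdot \mathbf{x}$ are interchangeable.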

Considering the case of 2 dimensional data points, implying every data point is of the form $\mathbf{x} = (x, y)$, putting $\mathbf{w} = (m, -1)$ in (1) gives us

$$mx - y = 0 \quad \Longrightarrow \quad y = mx$$

The right hand side of the above equation describes a line with slope 'm' passing through the origin. The above formulation is to convince the reader that in 2 dimensions, a hyperplane is essentially a line.

Adding an offset 'b' to the left hand side of (1), so that the hyperplane becomes $\mathbf{w}^T \mathbf{x} + b = 0$, gives

$$mx - y + b = 0 \quad \Longrightarrow \quad y = mx + b$$

Again, the RHS of the equation describes a line, now with slope 'm' and intercept 'b'. The equation of a hyperplane, when written in the vectorized form of (1), should give you the intuition that points with more than 2 dimensions can be dealt with just as easily.
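To make the link between the vectorized form and a plain 2D line concrete, here is a minimal Python sketch. The helper names `dot` and `hyperplane_value`, the example line y = 2x + 1 and the choice of normal vector w = (m, -1) are all assumptions made for illustration. Points on the line give a value of zero, and points on opposite sides give values of opposite sign.

```python
def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(ui * vi for ui, vi in zip(u, v))


def hyperplane_value(w, x, b=0.0):
    """Evaluate w.x + b; it is 0 exactly when x lies on the hyperplane."""
    return dot(w, x) + b


# Assumed example: the line y = 2x + 1, i.e. slope m = 2 and intercept b = 1.
m, b = 2.0, 1.0
w = (m, -1.0)  # one possible normal vector for this line

on_line = (3.0, m * 3.0 + b)  # the point (3, 7) lies on the line
above_line = (3.0, 10.0)      # same x, larger y
below_line = (3.0, 0.0)       # same x, smaller y

print(hyperplane_value(w, on_line, b))     # zero on the hyperplane
print(hyperplane_value(w, above_line, b))  # negative on one side
print(hyperplane_value(w, below_line, b))  # positive on the other side
```

The sign of w.x + b is what a linear classifier eventually uses to decide which side of the boundary a point falls on.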

The vector $\mathbf{w}$ is an important one. From the definition of a hyperplane, $\mathbf{w}$ is the normal to the hyperplane. Keeping this in mind, we will now determine the margin $m$ of a hyperplane (not to be confused with the slope 'm' written earlier). That will give us a very nice understanding of the optimization that an SVM performs.

Problem Formulation

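We consider a dataset containing $n$ points, denoted as $\mathbf{x}_i$, and each $\mathbf{x}_i$ has a label $y_i$, which is either +1 or -1. The labels could be encoded differently, but let's use +1 and -1 to understand the concept. Consider 3 hyperplanes HP0, HP1 and HP2 as follows

HP0: $\mathbf{w} \cdot \mathbf{x} + b = 0$

HP1: $\mathbf{w} \cdot \mathbf{x} + b = -1$

HP2: $\mathbf{w} \cdot \mathbf{x} + b = +1$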

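HP1 and HP2 are no ordinary hyperplanes; as per our objective of having a margin devoid of any data points lying inside it, HP1 and HP2 are selected such that for each data point $\mathbf{x}_i$

if $y_i = +1$, then $\mathbf{w} \cdot \mathbf{x}_i + b \geq 1$

or

if $y_i = -1$, then $\mathbf{w} \cdot \mathbf{x}_i + b \leq -1$

By introducing the above constraints, we have ensured that the region between HP1 and HP2 will have no data points in it. These 2 constraints can be concisely written as one as follows

$$y_i (\mathbf{w} \cdot \mathbf{x}_i + b) \geq 1 \quad \text{for all } i$$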
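The combined constraint, y_i (w.x_i + b) >= 1 for every training point, is easy to check in code. The following is a small sketch on made-up toy data; the function name `satisfies_margin_constraints` and the candidate values of w and b are assumptions chosen for illustration, not the output of an SVM.

```python
def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(ui * vi for ui, vi in zip(u, v))


def satisfies_margin_constraints(w, b, points, labels):
    """Return True if y_i * (w.x_i + b) >= 1 holds for every training point."""
    return all(y * (dot(w, x) + b) >= 1 for x, y in zip(points, labels))


# Toy data, assumed for illustration: two points per class in 2D.
points = [(2.0, 2.0), (3.0, 3.0), (0.0, 0.0), (-1.0, 0.0)]
labels = [+1, +1, -1, -1]

# Candidate hyperplane x1 + x2 - 2 = 0. It separates the classes and no
# point falls strictly inside the region between the two margin hyperplanes.
print(satisfies_margin_constraints((1.0, 1.0), -2.0, points, labels))

# The same hyperplane with w and b scaled down by 4. It still separates the
# classes, but the margin region is now wider and some points fall inside
# it, so the constraint fails.
print(satisfies_margin_constraints((0.25, 0.25), -0.5, points, labels))
```

Note that both candidates describe the same decision boundary. The constraint is what ties the scale of w to the width of the empty region around that boundary.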

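Having selected hyperplanes that obey the above constraint, next in line is the task of maximizing the distance (i.e., the margin) between HP1 and HP2. Look at the figure shown below. Let's consider a point $\mathbf{x}_0$ lying on the plane HP1. Notice that HP0 is equidistant from HP1 and HP2. If we had the point $\mathbf{z}_0$ on HP2 that is closest to $\mathbf{x}_0$, we could simply calculate the distance between $\mathbf{x}_0$ and $\mathbf{z}_0$ and that would be all. You might think that $\mathbf{z}_0$ can be obtained by simply adding $m$ to $\mathbf{x}_0$, but remember that while $\mathbf{x}_0$ is a vector, $m$ is a scalar, and thus the two can't be added. Looking at the figure below, let's think about what we need in order to get $\mathbf{z}_0$.

Point $\mathbf{x}_0$ can also be seen as a vector from the origin to $\mathbf{x}_0$ (as shown in the figure below). Similarly, we can have a vector beginning from the origin to $\mathbf{z}_0$. From the discussion above, we know that we require a new vector with magnitude $m$, the distance between HP1 and HP2. Let's call this vector $\mathbf{k}$. Being a vector, $\mathbf{k}$ also requires a direction, and we make $\mathbf{k}$ have the same direction as $\mathbf{w}$, the normal to HP2. Then $\mathbf{k}$, on addition to $\mathbf{x}_0$, will give us a point that lies on HP2 as shown below (triangle law of vector addition).

The direction of $\mathbf{w}$ is the unit vector $\mathbf{w} / \|\mathbf{w}\|$. Thus

$$\mathbf{k} = m \frac{\mathbf{w}}{\|\mathbf{w}\|}$$

Now, $\mathbf{z}_0 = \mathbf{x}_0 + \mathbf{k}$. We have obtained a point that lies on HP2. Since $\mathbf{z}_0$ lies on HP2, it satisfies the equation of HP2 ($\mathbf{w} \cdot \mathbf{z}_0 + b = 1$). Thus

$$\mathbf{w} \cdot (\mathbf{x}_0 + \mathbf{k}) + b = 1$$

$$\mathbf{w} \cdot \mathbf{x}_0 + m \frac{\mathbf{w} \cdot \mathbf{w}}{\|\mathbf{w}\|} + b = 1$$

$$\mathbf{w} \cdot \mathbf{x}_0 + b + m \|\mathbf{w}\| = 1$$

Since $\mathbf{x}_0$ lies on HP1, it satisfies the equation of HP1 ($\mathbf{w} \cdot \mathbf{x}_0 + b = -1$), thus

$$-1 + m \|\mathbf{w}\| = 1 \quad \Longrightarrow \quad m = \frac{2}{\|\mathbf{w}\|}$$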

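You must realize we have landed at a very important result here. Maximizing the margin is the same as minimizing $\|\mathbf{w}\|$, the norm of the vector $\mathbf{w}$. Thus, we now have a formulation for the constrained optimization problem that an SVM solves. It is usually written with $\frac{1}{2}\|\mathbf{w}\|^2$ as the objective, which has the same minimizer as $\|\mathbf{w}\|$ but is easier to differentiate.

minimize $\frac{1}{2} \|\mathbf{w}\|^2$

subject to $y_i (\mathbf{w} \cdot \mathbf{x}_i + b) \geq 1$ for all i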
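The relationship between the margin and the norm of w can be verified numerically. The sketch below assumes the convention that HP1 is w.x + b = -1 and HP2 is w.x + b = +1, and uses made-up values for w, b and x0. It builds k = m * w / ||w||, adds it to a point on HP1, and confirms that the result lands on HP2.

```python
import math


def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(ui * vi for ui, vi in zip(u, v))


def norm(w):
    """Euclidean norm ||w||."""
    return math.sqrt(dot(w, w))


def margin(w):
    """Distance between HP1 (w.x + b = -1) and HP2 (w.x + b = +1)."""
    return 2.0 / norm(w)


# Assumed example values, not taken from the figures in the post.
w, b = (3.0, 4.0), -2.0  # ||w|| = 5
x0 = (1.0, -0.5)         # lies on HP1: 3*1 + 4*(-0.5) - 2 = -1

m = margin(w)                                 # 2 / 5
k = tuple(m * wi / norm(w) for wi in w)       # k = m * w / ||w||
z0 = tuple(xi + ki for xi, ki in zip(x0, k))  # z0 = x0 + k

print(m)                # the margin
print(dot(w, x0) + b)   # value on HP1
print(dot(w, z0) + b)   # value on HP2, approximately +1

# Doubling w halves the margin, which is why a small ||w|| is desirable.
print(margin((6.0, 8.0)))
```

Scaling w up shrinks the gap between HP1 and HP2, so among all (w, b) that satisfy the constraints, the one with the smallest norm gives the widest margin.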

Primal and Dual

The above formulation is known as the primal optimization problem for an SVM. What is actually solved when determining the maximum margin classifier is known as the dual of the primal problem. The dual is obtained by using the method of Lagrange multipliers. The motivation behind solving the dual in place of the primal is that the algorithm can then be written entirely in terms of the dot products between the points in the input data set, a property which becomes very useful for the kernel trick (introduced later). Let's see what the dual formulation looks like.

$$\max_{\alpha} \; \sum_{i=1}^{n} \alpha_i - \frac{1}{2} \sum_{i=1}^{n} \sum_{j=1}^{n} \alpha_i \alpha_j y_i y_j \, (\mathbf{x}_i \cdot \mathbf{x}_j)$$

subject to $\alpha_i \geq 0$ for all i, and $\sum_{i=1}^{n} \alpha_i y_i = 0$

Seems overwhelming? Don't worry about its complexity and just take away the crucial points explained next. Here, the $\alpha_i$ are the Lagrange multipliers. Notice the term $\mathbf{x}_i \cdot \mathbf{x}_j$, which is the dot product of 2 input data points, $\mathbf{x}_i$ and $\mathbf{x}_j$. Also notice that while the primal was a minimization problem, the dual is a maximization one. Thus we have obtained a maximization problem in which the parameters are the $\alpha_i$ and we are dealing completely with the dot products between the data points. The benefit in this comes from the fact that dot products are very fast to evaluate. This dot product formulation comes in handy further too when kernels (introduced later) are used. Another interesting point is that $\alpha_i > 0$ will be true only for a small number of points, the ones which lie closest to the decision boundary. These points are known as the support vectors, and for making any future prediction for a new test data point $\mathbf{x}$, we will require only the inner products between $\mathbf{x}$ and these few support vectors, rather than the entire training set, for determining which class the point $\mathbf{x}$ belongs to. The points surrounded by a blue rectangle (nearest to the decision boundary) are the support vectors in our 2 class case.
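To see the dual and the role of support vectors in action, here is a tiny sketch using the standard dual objective, sum_i alpha_i - 1/2 sum_i sum_j alpha_i alpha_j y_i y_j (x_i . x_j), and the standard decision rule, sign(sum_i alpha_i y_i (x_i . x) + b). The three toy points and their multipliers are assumptions: the multipliers were worked out by hand for this small problem, whereas a real SVM would obtain them from a quadratic programming solver. The function names are also made up for illustration.

```python
def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(ui * vi for ui, vi in zip(u, v))


def dual_objective(alphas, points, labels):
    """sum_i alpha_i - 1/2 sum_i sum_j alpha_i alpha_j y_i y_j (x_i . x_j)."""
    n = len(points)
    pairwise = sum(
        alphas[i] * alphas[j] * labels[i] * labels[j] * dot(points[i], points[j])
        for i in range(n)
        for j in range(n)
    )
    return sum(alphas) - 0.5 * pairwise


def predict(x, alphas, points, labels, b):
    """Sign of sum_i alpha_i y_i (x_i . x) + b, using only points with alpha_i > 0."""
    score = b + sum(
        a * y * dot(xi, x)
        for a, xi, y in zip(alphas, points, labels)
        if a > 0  # points that are not support vectors contribute nothing
    )
    return 1 if score >= 0 else -1


# Toy data (assumed). The third point is far from the boundary.
points = [(1.0, 1.0), (-1.0, -1.0), (3.0, 3.0)]
labels = [+1, -1, +1]
alphas = [0.25, 0.25, 0.0]  # hand-derived; the third point is not a support vector
b = 0.0

print(dual_objective(alphas, points, labels))            # 0.25
print(predict((2.0, 0.5), alphas, points, labels, b))    # 1
print(predict((-0.5, -2.0), alphas, points, labels, b))  # -1
```

For these multipliers, w = sum_i alpha_i y_i x_i = (0.5, 0.5), so the primal objective 1/2 ||w||^2 is also 0.25, matching the dual value. The point (3, 3) has a zero multiplier and could be dropped from the training set without changing any prediction.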

Linearly Non-Separable Data – Soft Margin & Kernels

Till now, we have very conveniently assumed our training set to be linearly separable. However, if the 2 classes are not linearly separable, or are linearly separable but only with a very narrow margin, SVMs offer the concept of a soft margin. It often happens that by violating the constraints for a few points, we get a better margin for the separating hyperplane than by insisting on a strictly separating hyperplane, as in the figure shown below. If the constraints are strictly followed for all the points in this case, the resulting separating hyperplane has a very small margin.

Thus, sometimes by allowing a few mistakes while partitioning the training data, we can get a better margin.

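At the same time, the SVM applies a penalty on the points for which the constraints are being violated.

$$\xi_i = \max\big(0, \; 1 - y_i (\mathbf{w} \cdot \mathbf{x}_i + b)\big)$$

Looking at the equation, we can see that $\xi_i$ will be 0 for data points on the correct side of the margin, while it will be a positive number for data points on the wrong side. This alters our minimization problem from before to

minimize $\frac{1}{2} \|\mathbf{w}\|^2 + C \sum_{i=1}^{n} \xi_i$

subject to $y_i (\mathbf{w} \cdot \mathbf{x}_i + b) \geq 1 - \xi_i$ and $\xi_i \geq 0$ for all i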

The variable $C$ is the regularization parameter, and it determines the trade-off between maximizing the margin and ensuring that all training data points lie on the correct side of the margin. If $C$ is small, then we are effectively making the total penalty term $C \sum_i \xi_i$ small in the objective function, so violations are cheap and we get a large margin. The opposite becomes true if the chosen $C$ is large.
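The effect of C can be illustrated by evaluating the standard soft-margin objective, 1/2 ||w||^2 + C * sum of slacks, for two candidate hyperplanes. This is only a sketch: the toy data and the two candidates are hand-picked assumptions, and the code compares their costs rather than actually solving the optimization problem. The slack is computed as max(0, 1 - y (w.x + b)).

```python
def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(ui * vi for ui, vi in zip(u, v))


def slack(w, b, x, y):
    """Slack of one point: 0 if y * (w.x + b) >= 1, positive otherwise."""
    return max(0.0, 1.0 - y * (dot(w, x) + b))


def soft_margin_objective(w, b, points, labels, C):
    """1/2 ||w||^2 + C * (sum of slacks over all training points)."""
    total_slack = sum(slack(w, b, x, y) for x, y in zip(points, labels))
    return 0.5 * dot(w, w) + C * total_slack


# Toy data (assumed): the positive point at (1, 0) sits close to the negatives.
points = [(4.0, 0.0), (5.0, 0.0), (1.0, 0.0), (0.0, 0.0), (-1.0, 0.0)]
labels = [+1, +1, +1, -1, -1]

# Two hand-picked candidates (not the output of an optimizer):
wide = ((0.5, 0.0), -1.0)    # margin 2 / 0.5 = 4, but (1, 0) violates it
narrow = ((2.0, 0.0), -1.0)  # margin 2 / 2 = 1, no violations

for C in (0.1, 10.0):
    wide_cost = soft_margin_objective(*wide, points, labels, C)
    narrow_cost = soft_margin_objective(*narrow, points, labels, C)
    better = "wide" if wide_cost < narrow_cost else "narrow"
    print(C, wide_cost, narrow_cost, better)
```

With the small C, the wide-margin candidate has the lower cost even though it violates the constraint for one point. With the large C, the violation becomes expensive and the narrow, strictly separating candidate wins.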

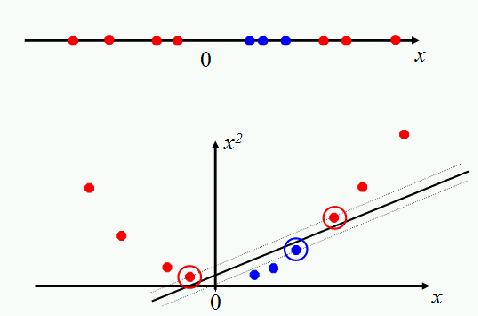

Another way for SVMs to deal with data points that are not linearly separable is the kernel trick. The idea stems from the fact that if the data cannot be partitioned by a linear boundary in its current dimensionality, then projecting the data into a higher dimensional space may make it linearly separable, such as in the figure shown below (taken from the internet).

When such is the case, there will be a function $\phi$ that projects the data points from their current space to the higher dimensional space, and $\phi(\mathbf{x})$ will replace $\mathbf{x}$ in all the above equations. In the figure above, we can see that when the 1 dimensional points were projected to a 2 dimensional space (the higher dimensional space), they became linearly separable.
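A projection of this kind can be sketched in a few lines. The feature map phi(x) = (x, x^2), the toy 1D data and the separating line used below are all assumptions chosen for illustration; they are not taken from the figure.

```python
def phi(x):
    """Assumed feature map for illustration: lift a 1D point x to (x, x^2)."""
    return (x, x * x)


# Toy 1D data (assumed): the -1 class sits in the middle and the +1 class on
# both sides, so no single threshold on x separates them.
xs = [-3.0, -2.0, -1.0, 0.0, 1.0, 2.0, 3.0]
ys = [+1, +1, -1, -1, -1, +1, +1]

# In the lifted space the horizontal line x^2 = 2.5 separates the classes,
# i.e. the hyperplane w.phi(x) + b = 0 with w = (0, 1) and b = -2.5.
w, b = (0.0, 1.0), -2.5


def classify(x):
    """Classify a 1D point by the sign of w.phi(x) + b in the lifted space."""
    px = phi(x)
    score = w[0] * px[0] + w[1] * px[1] + b
    return 1 if score >= 0 else -1


print([classify(x) for x in xs] == ys)  # True
```

A boundary that is linear in the lifted space corresponds to a non-linear rule in the original space, here "x^2 greater than 2.5".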

As mentioned before, here too we get the benefit of solving the optimization problem in terms of dot products only, as the kernel function can simply be written as the dot product of the transformed points $\phi(\mathbf{x}_i)$ and $\phi(\mathbf{x}_j)$:

$$K(\mathbf{x}_i, \mathbf{x}_j) = \phi(\mathbf{x}_i) \cdot \phi(\mathbf{x}_j)$$

Now what's interesting to note is that the kernel $K(\mathbf{x}_i, \mathbf{x}_j)$ is often much easier to calculate directly than by first calculating $\phi(\mathbf{x}_i)$ and $\phi(\mathbf{x}_j)$ and then their dot product, since the vectors become high dimensional after their projection. This is why the method is called the kernel trick: an efficient implementation of the kernel allows us to learn in the higher dimensional space without explicitly performing any projection or determining the vectors $\phi(\mathbf{x}_i)$ and $\phi(\mathbf{x}_j)$.
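The following sketch shows the trick for one well-known case, the homogeneous polynomial kernel of degree 2 on 2D points, K(x, z) = (x . z)^2, whose explicit feature map is phi(x) = (x1^2, sqrt(2) x1 x2, x2^2). The kernel choice and the sample points are assumptions for illustration; the post itself does not commit to a particular kernel.

```python
import math


def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(ui * vi for ui, vi in zip(u, v))


def phi(x):
    """Explicit degree-2 feature map for a 2D point (x1, x2)."""
    x1, x2 = x
    return (x1 * x1, math.sqrt(2.0) * x1 * x2, x2 * x2)


def poly_kernel(x, z):
    """Homogeneous polynomial kernel of degree 2: K(x, z) = (x . z)^2."""
    return dot(x, z) ** 2


x = (1.0, 2.0)
z = (3.0, -1.0)

explicit = dot(phi(x), phi(z))  # project to 3D first, then take the dot product
implicit = poly_kernel(x, z)    # stay in the original 2D space

print(explicit, implicit)  # equal up to floating point rounding
```

Both routes give the same number, but the kernel never constructs the 3D vectors. For higher degrees or higher input dimensions the explicit vectors grow very quickly, while the kernel still costs one dot product in the original space.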

Conclusion

This post was an effort to give a comprehensive idea of how SVMs work, from introducing the key concepts and developing intuition about the keywords to the actual problem formulation, albeit with much of the mathematics intentionally skipped. We aimed to cover as many keywords related to SVMs as possible; nevertheless, it's expected that some important content was missed. Readers are encouraged to delve further into the mathematical formulation and to discuss it with us, and also to let us know in the comments about any other SVM concepts they would like to see elaborated. It will motivate us to cover those concepts in future posts.

Acknowledgement

- Alexandre KOWALCZYK’s blog – “SVM Tutorial”. (link)

- Non-Linear SVMs. (link)

- Lecture 2 – The SVM Classifier. (link)

- Andrew Ng, ‘Support Vector Machines’, Part V, CS229 Lecture notes. (link)


Happy machine learning
